As part of the Coalition’s mission to make better public tools to understand open records and meetings law, we are proud to announce a Status Check feature that has been added to our Sunshine Library!

Attorney General opinions and decisions, like rulings of the courts, can be changed months or years later by a subsequent AG publication, often in response to changes in statutes or court rulings. Before today, checking whether an opinion or decision of the Kentucky AG had been modified, withdrawn, or overruled was an onerous task that had to be performed manually. Thanks to the growing power of AI, specifically Large Language Models (LLMs) like those that power ChatGPT, we are able to offer a new collection of citation treatments generated by GPT-4 and selectively reviewed for accuracy by Coalition directors.

Lawyers are familiar with “Shepardizing” or “KeyCiting,” the process of using the major legal publishers' citators to validate that any cases cited are still good law. Our Status Checks cannot fully replicate the thoroughness of the major legal citators, but they provide important context to the public about how later Attorney General publications have treated older opinions.

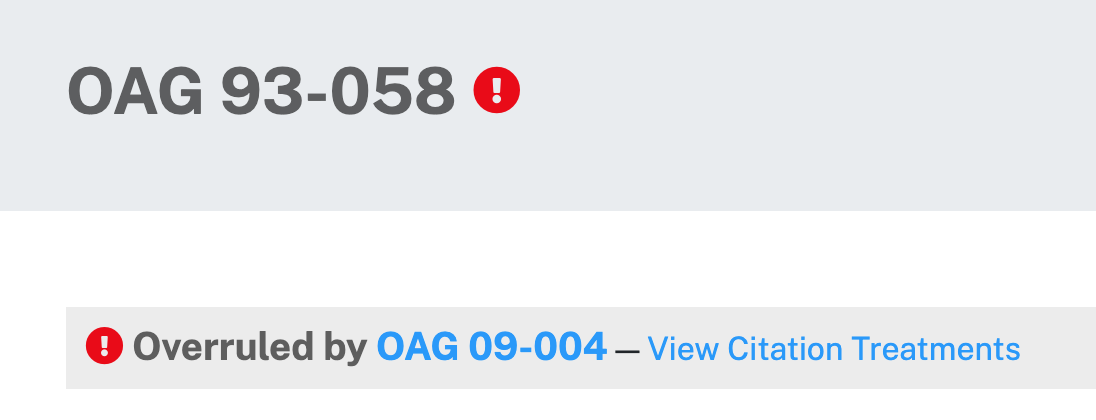

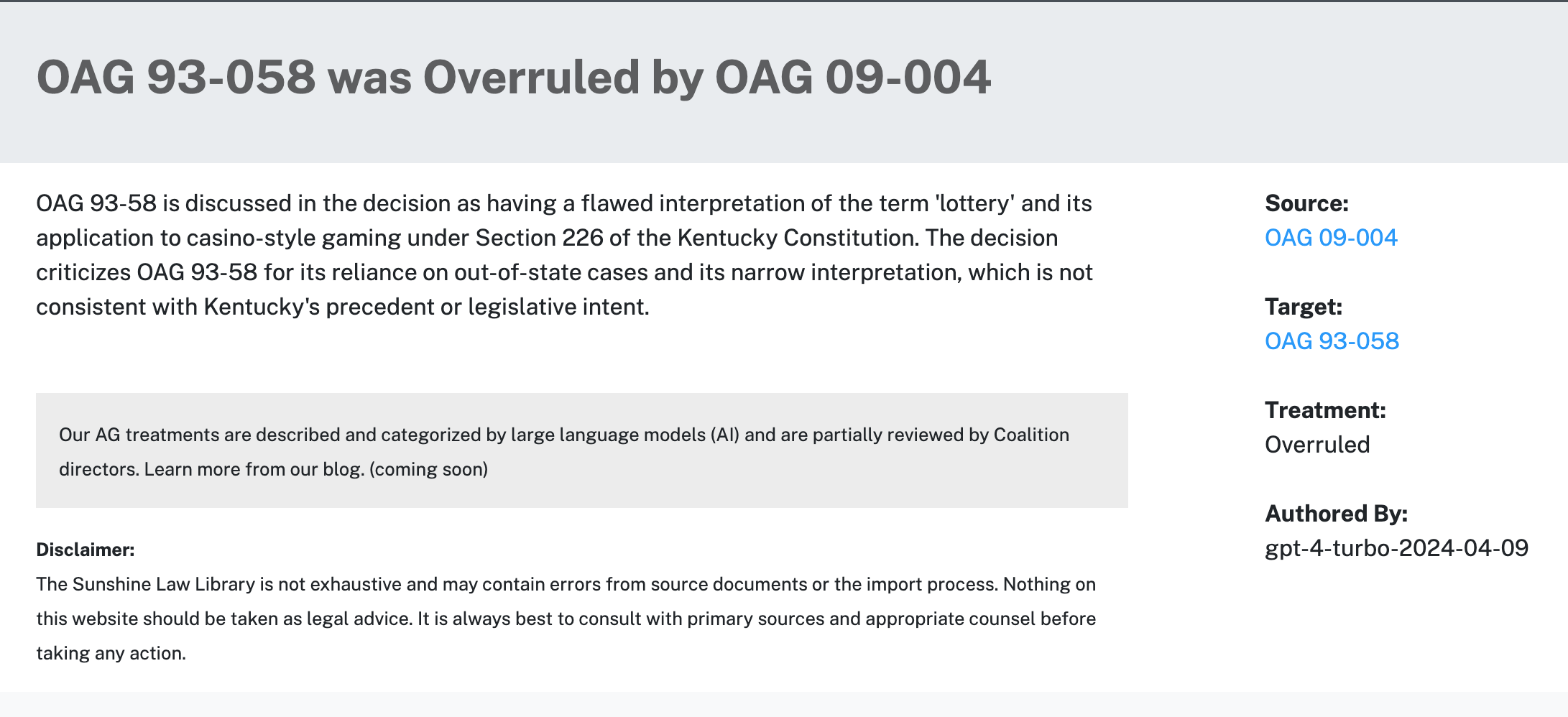

When you visit an AG opinion or decision in our library, entries that have been altered by a later authority have a red icon next to the title citation, with a message identifying the later publication responsible for the change.

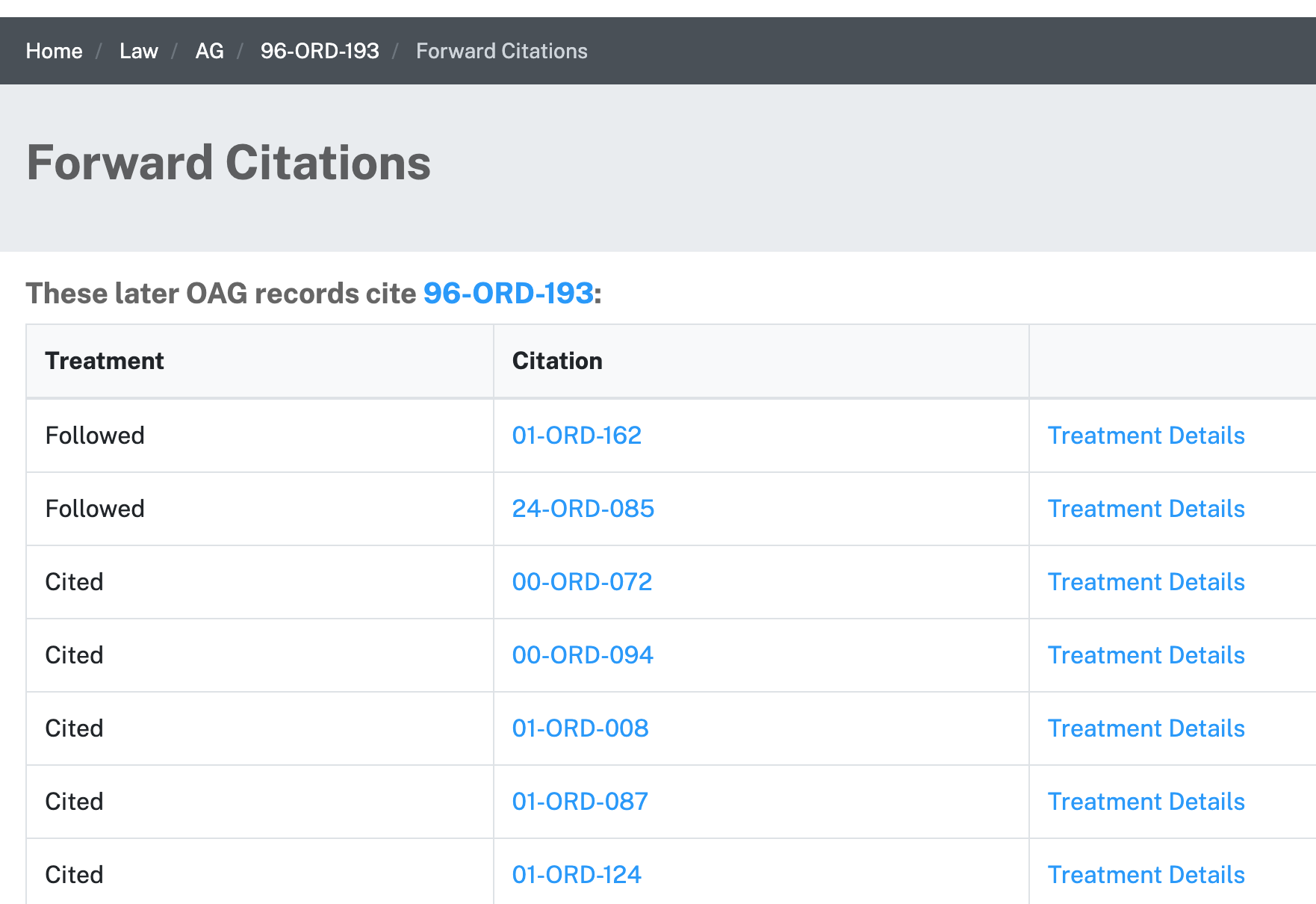

The library's opinions and decisions previously included a list of later records citing them, and this list now shows how each newer publication treated the citation (Followed, Modified, Overruled, Withdrawn, or Cited).
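To make the display logic concrete, here is a minimal TypeScript sketch of how a record's red-flag message could be derived from the treatments later records gave it. It assumes the single-letter keys from the system prompt in the Methodology section and a hypothetical `CitingTreatment` shape; it illustrates the idea and is not the library's actual code.

```typescript
// Letter keys from the system prompt (Methodology section).
type TreatmentKey = "F" | "M" | "W" | "O" | "C" | "N";

// Hypothetical shape: how one later AG publication treated an earlier record.
interface CitingTreatment {
  citingRecord: string; // e.g. "17-ORD-197"
  treatment: TreatmentKey;
}

// Human-readable labels shown in the "cited by" list.
const LABELS: Record<TreatmentKey, string> = {
  F: "Followed",
  M: "Modified",
  W: "Withdrawn",
  O: "Overruled",
  C: "Cited",
  N: "Not found",
};

// Only these treatments mean the earlier record was altered (red icon).
const ALTERING = new Set<TreatmentKey>(["M", "W", "O"]);

// Returns a message naming each later publication that altered the record,
// or null when no later publication altered it.
function statusMessage(citation: string, treatments: CitingTreatment[]): string | null {
  const altering = treatments.filter((t) => ALTERING.has(t.treatment));
  if (altering.length === 0) return null;
  return altering
    .map((t) => `${citation} was ${LABELS[t.treatment]} by ${t.citingRecord}`)
    .join("; ");
}

// Prints "96-ORD-176 was Modified by 17-ORD-197"
console.log(statusMessage("96-ORD-176", [{ citingRecord: "17-ORD-197", treatment: "M" }]));
```

A "Cited" or "Followed" treatment still appears in the record's citing list but does not trigger the flag, which matches the conservative approach described under Methodology.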

Each of these AI Status Checks also has an AI-generated explanation. The model writes this explanation before it chooses the treatment category, a technique known as “chain-of-thought” prompting. Clicking on “Treatment Details” will show the explanation for the treatment along with details about when it was generated.

Methodology

Great care has been taken in developing this feature and rolling it out to the public, and we want to be transparent about its methodology and coverage. To learn where the AG data comes from and how the Sunshine Law Library began, check out our previous blog post and the introduction to the library.

Current LLMs accept what is referred to as a “system prompt,” which gives the AI model context for how it should respond to user inputs. The final prompt used in our library resulted from several weeks of small tests using citations from 1992 and 2000 as test cases; in those years, several opinions that overruled previous opinions had been identified through keyword searches. This is the system prompt that was used for the majority of the citations in our database:

You are an AI paralegal assistant shepardizing opinions and decisions of the attorney general of Kentucky.

### Instruction:

For each of the "references", provide an explanation for why it is cited in the "decision" provided. Pick the most appropriate category for how this decision treats the cited case:

Possible keys for the "treatment" are:

F - Followed, when the decision follows reasoning from the citation;

M - Modified, when the decision expressly modified the cited previous decision;

W - Withdrawn, when the decision expressly withdraws the cited previous decision;

O - Overruled, when the decision expressly overrules or reverses the cited previous decision;

C - Cited, for all other valid citations, such as distinguishing cases or when a citation is otherwise discussed without being modified, withdrawn, or overruled; or

N - Not found, when the citation is not found in the decision text.

Give a "title" in the format, "[cited case] was [treatment verb] by [decision]", ex. "96-ORD-176 was Modified by 17-ORD-197". The "treatment" should just be the letter key from the above list.

Make sure that your treatments reflect how the full text your read treats the cited case, and not the agency response.

Finally, provide a brief "summary" of the decision.

Your response must be correct JSON that uses these interfaces:

interface Reference {"explanation":string;"title":string;"treatment":string;}

interface Ouput {"references":{["citation":string]:Reference};"summary":string;}
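The interfaces at the end of the prompt are written loosely for the model; the index signature `["citation":string]` is not valid TypeScript. A minimal sketch, assuming you want to check the model's JSON before storing it, could express the same shape as valid TypeScript and validate a response like this. `parseOutput` is a hypothetical helper, not part of the library's published code.

```typescript
type TreatmentKey = "F" | "M" | "W" | "O" | "C" | "N";

interface Reference {
  explanation: string; // kept first, mirroring the prompt's reason-then-classify order
  title: string;
  treatment: TreatmentKey;
}

interface Output {
  references: { [citation: string]: Reference };
  summary: string;
}

const KEYS = new Set<string>(["F", "M", "W", "O", "C", "N"]);

// Parses raw model text and throws if it does not match the expected shape.
function parseOutput(raw: string): Output {
  const data: unknown = JSON.parse(raw);
  if (typeof data !== "object" || data === null) {
    throw new Error("response is not a JSON object");
  }
  const obj = data as Record<string, unknown>;
  if (typeof obj.summary !== "string") {
    throw new Error("missing string 'summary'");
  }
  const refs = obj.references;
  if (typeof refs !== "object" || refs === null) {
    throw new Error("missing 'references' object");
  }
  for (const [citation, value] of Object.entries(refs)) {
    if (typeof value !== "object" || value === null) {
      throw new Error(`reference ${citation} is not an object`);
    }
    const r = value as Record<string, unknown>;
    if (typeof r.explanation !== "string" || typeof r.title !== "string") {
      throw new Error(`reference ${citation} is missing text fields`);
    }
    if (typeof r.treatment !== "string" || !KEYS.has(r.treatment)) {
      throw new Error(`reference ${citation} has an unknown treatment`);
    }
  }
  return data as Output;
}

const raw =
  '{"references":{"96-ORD-176":{"explanation":"...","title":"96-ORD-176 was Modified by 17-ORD-197","treatment":"M"}},"summary":"..."}';
console.log(parseOutput(raw).references["96-ORD-176"].treatment); // M
```

Rejecting unknown letter keys at parse time means a malformed response can be retried rather than silently stored.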

In early testing, I did not have the model explain its treatment or provide the “title,” and I found consistently better performance once I added these steps before the final treatment was produced. The “title” was added to the prompt about ⅓ of the way through the full process, as I found it better kept the model from applying the “Modified” category when an opinion used a citation to require an agency to modify its own position.
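Because the title spells out the treatment in words, it also allows a cheap consistency check. The sketch below is a hypothetical post-processing step, not something described as part of our pipeline: it parses the verb out of a title in the prompt's "[cited case] was [treatment verb] by [decision]" format and confirms it agrees with the letter key.

```typescript
type TreatmentKey = "F" | "M" | "W" | "O" | "C" | "N";

const VERBS: Record<TreatmentKey, string> = {
  F: "Followed",
  M: "Modified",
  W: "Withdrawn",
  O: "Overruled",
  C: "Cited",
  N: "Not found",
};

// "[cited case] was [treatment verb] by [decision]"
const TITLE_PATTERN = /^(.+?) was (.+?) by (.+)$/;

// Returns the verb portion of a title, or null if the title is malformed.
function verbFromTitle(title: string): string | null {
  const m = TITLE_PATTERN.exec(title.trim());
  return m ? m[2] : null;
}

// True when the title's verb matches the chosen letter key (case-insensitive).
function titleAgrees(title: string, treatment: TreatmentKey): boolean {
  const verb = verbFromTitle(title);
  return verb !== null && verb.toLowerCase() === VERBS[treatment].toLowerCase();
}

console.log(titleAgrees("96-ORD-176 was Modified by 17-ORD-197", "M")); // true
console.log(titleAgrees("96-ORD-176 was Modified by 17-ORD-197", "C")); // false
```

A disagreement between the two fields would be a natural signal to send that treatment for human review.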

I manually reviewed all treatments where the model applied the modified, overruled, or withdrawn category and corrected a handful of mistakes I found. There were some cases where the official treatment was somewhat ambiguous, and for these I consulted with Coalition Co-Director Amye Bensenhaver, a 26-year veteran author of AG opinions. We decided on a conservative approach of only considering a previous opinion to be altered when explicitly done so by a future opinion; there are some AG records that disagree with older opinions while not explicitly altering them, and we have left those treatments with the generic “Cited” category.
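The review policy above amounts to a filter plus a conservative override. A sketch, assuming a hypothetical flattened `TreatmentRow` shape: only Modified, Overruled, and Withdrawn treatments are queued for a human, and a reviewer who finds no explicit alteration downgrades the row to the generic Cited category.

```typescript
type TreatmentKey = "F" | "M" | "W" | "O" | "C" | "N";

// Hypothetical row: one model-generated treatment of a cited record.
interface TreatmentRow {
  cited: string;
  citing: string;
  treatment: TreatmentKey;
}

// Treatments that claim the earlier record was altered.
const NEEDS_REVIEW = new Set<TreatmentKey>(["M", "O", "W"]);

// Rows a human must check before they are published.
function reviewQueue(rows: TreatmentRow[]): TreatmentRow[] {
  return rows.filter((r) => NEEDS_REVIEW.has(r.treatment));
}

// Conservative rule: unless the later record explicitly alters the earlier
// one, the treatment falls back to "C" (Cited).
function applyReview(row: TreatmentRow, explicitlyAltered: boolean): TreatmentRow {
  return explicitlyAltered ? row : { ...row, treatment: "C" };
}
```

Rows the model labeled Followed or Cited skip the queue entirely, which is why most of them were not reviewed (see Limitations).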

In terms of model selection, I ran early tests with open source LLMs including Llama 2, Mistral, and Gemma, but these had major issues with more complex opinions and did not support the larger context windows that commercial models now offer. A small test with Claude 3 Sonnet had moderately successful results; however, cost concerns prevented a larger rollout, and there were errors in nuanced treatments that might have required moving up to the larger (and more expensive) Claude 3 Opus model.

This project was made possible through the generous support Microsoft provides to nonprofit organizations in the form of Azure compute credits. The Coalition was awarded a $2,000 grant for its cloud compute platform, which enabled this large-scale project to come to fruition. After a series of tests against records from 1992 and 2000, the most recent GPT-4 Turbo model was selected for its balance of speed, accuracy, and lower price compared to the full GPT-4.

For the initial launch, the library has generated 58,462 citation treatments from the 15,301 AG opinions and decisions we currently maintain at a cost of $805. This breaks down to an average of $0.0526 per AG record or $0.0138 per citation treatment.
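The per-unit figures follow directly from the totals above. This snippet simply reproduces the division (and, as a by-product, the average of roughly 3.8 citation treatments per AG record).

```typescript
// Totals for the initial launch, as reported above.
const totalCostUsd = 805;
const records = 15301; // AG opinions and decisions
const treatments = 58462; // citation treatments generated

const perRecord = totalCostUsd / records;
const perTreatment = totalCostUsd / treatments;
const treatmentsPerRecord = treatments / records;

console.log(perRecord.toFixed(4)); // 0.0526
console.log(perTreatment.toFixed(4)); // 0.0138
console.log(treatmentsPerRecord.toFixed(2)); // 3.82
```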

In addition to the historical AG records we keep in the library, our import process for new opinions and decisions published every Monday now includes automatic AI extraction and processing of citation treatments. We hope to maintain the Sunshine Law Library as the best public resource for researching questions of open government in Kentucky.

Limitations

Here I want to call out some limitations that currently exist in our Authority Status system:

- Some citations were not correctly parsed out of the source documents, so no treatments were generated for them. I had separately written code (using traditional regex) that extracted citations to previous OAGs, ORDs, and OMDs using the various formats I found in the source documents. During my review, I did find places where citations were missed due to atypical presentation in the source documents.

- Some Attorney General records contain typos, including citation typos that produce incorrect references. These have been corrected and noted where found, but there may be others that were not identified.

- Status Checks are limited to subsequent treatments by the Attorney General’s Office, not by the courts. Open records and meetings decisions can be appealed to the Circuit Court in the agency’s county, and the Court can issue a ruling that is binding only on the parties in that case. Only cases that receive final appellate review or ruling become new statewide law, but these are not currently reflected in the Status Checks unless the AG’s office expressly updated a previous authority when publishing a new one.

- The majority of Cited and Followed treatments were not reviewed by Coalition directors.
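The citation extraction mentioned in the first limitation is ordinary regular-expression matching. The sketch below is hypothetical: its patterns are assumptions based on citation forms like `96-ORD-176` and an assumed `OAG 92-45` style, not the Coalition's actual extractor, which handled more variants. Accepting either a hyphen or whitespace between the parts is one way to tolerate atypical presentation.

```typescript
// Assumed patterns: "96-ORD-176", "97 OMD 110", "OAG 92-45".
const ORD_OMD = /\b(\d{2})[-\s](ORD|OMD)[-\s](\d+)\b/g;
const OAG = /\bOAG\s+(\d{2})-(\d+)\b/g;

// Returns unique citations in first-seen order, normalized to hyphenated form.
function extractCitations(text: string): string[] {
  const found = new Set<string>();
  let m: RegExpExecArray | null;

  ORD_OMD.lastIndex = 0; // reset global regex state between calls
  while ((m = ORD_OMD.exec(text)) !== null) {
    found.add(`${m[1]}-${m[2]}-${m[3]}`);
  }

  OAG.lastIndex = 0;
  while ((m = OAG.exec(text)) !== null) {
    found.add(`OAG ${m[1]}-${m[2]}`);
  }

  return Array.from(found);
}

console.log(extractCitations("See 96-ORD-176 and 97 OMD 110; see also OAG 92-45."));
// [ '96-ORD-176', '97-OMD-110', 'OAG 92-45' ]
```

Any format the patterns do not anticipate (for example, a four-digit year) is silently skipped, which is exactly the failure mode described above.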

Your Help

The Status Check feature represents a significant milestone in our mission to make open government research more accessible and transparent. By leveraging the capabilities of AI, we have transformed a previously arduous manual process into an efficient, user-friendly experience. We are excited about the potential impact of this feature and are committed to continuous improvement. As we move forward, we will continue to refine our process, address these limitations, and improve the search and explanation capabilities across the library.

We invite you to explore the Authority Status feature and share your feedback. Your input is invaluable in helping us enhance the Sunshine Library and ensure it remains a premier resource for open government research in Kentucky.

If you have any feedback regarding our treatments or this process, we encourage you to reach out to us through our contact form.